We’ve had a remarkable response in the two weeks since we published Surgeon Scorecard. The online database has been viewed more than 1.3 million times by people looking up doctors. Surgeons and others pored over the intricacies of the data and methodology. Some praised it as a long overdue, transformative step that will protect patients and spur change. Others were sharply critical, pointing to limitations in the data and what they viewed as flaws in the analysis.

Perhaps the most striking response, though, came from one of our readers, the husband of a nursing supervisor at a medical/surgical unit in a respected Southwestern hospital.

“When my mother required gallbladder surgery, my wife specifically ensured that a certain surgeon wasn’t on call for the procedure,” he wrote. “While I was at the hospital visiting my wife, I mentioned casually to two of her coworkers (separately) that my mother was upstairs awaiting surgery. Both nurses asked cautiously who was on call and when they found out it was Dr. [redacted] ... they breathed a sigh of relief.”

That doctor that hospital insiders protected their loved ones from? The nurses called him “Dr. Abscess.”

For decades, the shortcomings of the nation’s Dr. Abscesses have been an open secret among health care providers, hidden from patients but readily apparent to those with access to the operating room or a hospital’s gossip mill.

The author of this email, whose name we’re omitting for obvious reasons, asked his wife to rate the surgeons she assisted every day, and compared her thoughts to what we reported in Surgeon Scorecard. “I randomized the names as I listed them to her, and she hadn’t read about the Scorecard and didn’t know anything about the results. She simply told me her choice for the best and worst and was right across the board.”

Dr. Abscess? He had the highest complication rate of any doctor operating at the hospital.

Surgeon Scorecard marks ProPublica’s first attempt to make data available about surgeons. Like any first attempt, version 1.0 can be improved, and we plan to do so in the coming months.

But it’s worth noting that some of the most frequently cited concerns reflect basic misunderstandings about how Surgeon Scorecard works. I’d like to address some of those questions in detail.

There isn’t enough data to learn anything useful about individual surgeons.

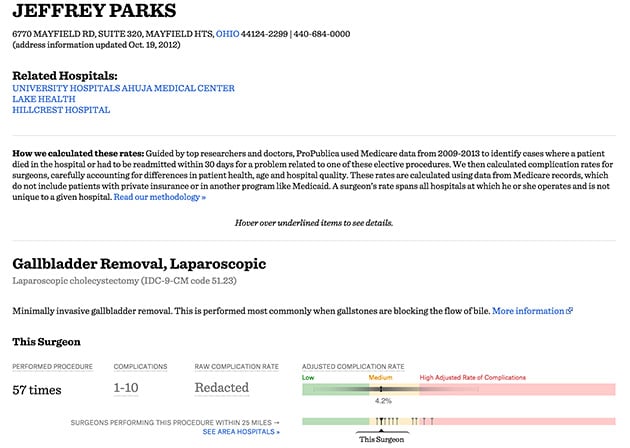

Many critics have noted that Surgeon Scorecard draws only on records of procedures paid for by Medicare’s fee-for-service program. “I can’t reiterate enough the paucity of the data that is analyzed,” Dr. Jeffrey Parks, a general surgeon in Solon, Ohio, wrote in one typical critique.

In fact, study after study of the nation’s health care system by some of academe’s most prestigious scholars draws on similar data from Medicare. Medical research is typically done using far smaller groups to represent even larger populations. Even in large clinical trials, new drugs are tested on a few thousand people who serve as stand-ins for all 320 million Americans.

Overall, Surgeon Scorecard looked at 2.3 million surgical procedures and rated nearly 17,000 doctors. The complication rates we’ve computed for surgeons have been adjusted to control for each patient’s age and health, and the differences among the hospitals where they work. For each doctor, the rate is reported in a range called a confidence interval.

The width of a surgeon’s interval can vary depending on how much information is in our data about him or her. Surgeons who do a large number of Medicare operations requiring overnight stays will have a narrower interval that homes in more precisely on their true performance. Surgeons who’ve done fewer operations have a wider range. Our critics have said this means we can’t know anything about how they compare to peers.
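To see why caseload matters, here is a minimal sketch of how an interval narrows as the number of operations grows. It is an illustration only: it uses a standard Wilson score interval on raw, unadjusted counts, not the risk-adjusted model behind Surgeon Scorecard, and the function name and caseloads are hypothetical.

```python
import math


def wilson_interval(complications, operations, z=1.96):
    """Approximate 95% Wilson score interval for a raw complication rate.

    Assumption: each operation is treated as an independent trial with no
    adjustment for patient age, health or hospital.
    """
    if operations <= 0:
        raise ValueError("operations must be positive")
    p = complications / operations
    denom = 1 + z ** 2 / operations
    center = (p + z ** 2 / (2 * operations)) / denom
    half_width = (
        z
        * math.sqrt(p * (1 - p) / operations + z ** 2 / (4 * operations ** 2))
        / denom
    )
    return center - half_width, center + half_width


# Hypothetical surgeons with the same raw rate (5 percent) but different caseloads.
for complications, operations in [(2, 40), (20, 400), (200, 4000)]:
    low, high = wilson_interval(complications, operations)
    print(f"{operations:>5} operations: {low:.1%} to {high:.1%}")
```

The raw rate is identical in all three cases; only the amount of evidence changes, and the interval tightens accordingly.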

It would be great if every surgeon did thousands of operations. That would make differences between surgeons more clear-cut. But patients have to choose now based on the procedures actually performed. The complication rates we report are the most likely values given what we can know. They give a strong signal that some surgeons perform better than others, even if the differences aren’t always as conclusive as some might like.

You can read the calculations that support that finding here.

Parks and other general surgeons have raised a reasonable question about gallbladder removals, one of the eight elective procedures we studied. Because of the way Medicare compiles billing records, our data includes only patients whose operation required an overnight hospital stay. Many doctors also perform this procedure on an outpatient basis.

There is no question that patients would know more if we could publish complication rates that include the outpatient procedures. We are now trying to obtain the records needed to do this.

Counting readmissions to the hospital and deaths is not a fair way to track surgeon performance since Medicare data does not say who precisely was at fault.

We thought a lot about this issue when we created Surgeon Scorecard and consulted closely with a number of experts, including surgeons who do the eight procedures we studied. The consensus was that a surgeon in the operating room was comparable to a captain of a ship – remunerated and accountable for his or her leading role in the care of patients.

The American College of Surgeons has historically embraced that view, declaring in 1996 that surgeons are “responsible” for both the “safe and competent performance of the operation” and all aspects of “postoperative care.”

“The best interest of the patient is thus optimally served because of the surgeon’s comprehensive knowledge of the patient’s disease and surgical management,” the college said.

In a 2009 statement on patient safety, the group said it was the surgeon’s “responsibility” to oversee preoperative preparation; “perform the operation safely and competently;” plan the method of anesthesia with the anesthesiologist; and deliver postoperative care including “personal participation in the direction of this care and management of any postoperative complications should they occur.”

Last week, the College of Surgeons appeared to shift its stance, suggesting that surgeon ratings should not be published unless it were clear which member of the surgical team was to blame for complications that led to readmissions or deaths.

Responding to Surgeon Scorecard and an effort by the website Consumers' Checkbook, the group described surgery as a “team experience” in which doctors work closely with surgical nurses and an anesthesiologist. “Many factors and many individuals contribute to the surgical outcome,” the group said. “Rating a surgeon’s skill in performing a particular operation, without factoring in those other considerations, leads to an incomplete analysis.”

Harvard health policy researcher Ashish Jha, among others, offered a persuasive response to this argument. While noting that Surgeon Scorecard is clearly “imperfect,” he argued in a recent blog post that it is still a marked improvement. Jha told the story of a patient he called “Bobby,” who had suffered recurrent lung infections after an operation. Bobby chose his surgeon after consulting a friend who had undergone a similar procedure.

Jha, who informally advised us on Surgeon Scorecard, wrote that patients and health care providers should assess new tools in that context. “The right question is – will it leave Bobby better off? I think it will. Instead of choosing based on a sample size of one (his buddy who also had lung surgery), he might choose based on sample size of 40 or 60 or 80. Not perfect. Large confidence intervals? Sure. Lots of noise? Yup. Inadequate risk-adjustment? Absolutely. But, better than nothing? Yes. A lot better.”

More broadly, readmissions to the hospital do not always arise from surgical complications. Sometimes doctors readmit patients to the hospital out of an abundance of caution, and they are quickly released.

As noted, we tried to incorporate clinical judgment in our model, asking doctors, including surgeons who performed each type of procedure, to identify only particular complications that were most likely the result of the initial surgery. Each was chosen by the agreement of at least five experts. When a majority did not agree, we omitted those types of complications. We ended up with a list of more than 300 complications. When a diagnosis on that list was the reason for a hospital readmission within 30 days of surgery, we counted it as a complication in our scorecard.
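The counting rule described above can be sketched in a few lines. This is a simplified illustration, not our production code: the function name, the two example diagnoses standing in for the full expert-approved list, and the choice to measure the 30 days from the surgery date are all assumptions made for the example.

```python
from datetime import date

# Hypothetical stand-in for the expert-approved list of 300-plus diagnoses.
COMPLICATION_DIAGNOSES = {"postoperative infection", "blood clot"}

# Readmissions are counted only within this many days of surgery.
READMISSION_WINDOW_DAYS = 30


def counts_as_complication(surgery_date, readmission_date, reason_for_readmission,
                           approved=COMPLICATION_DIAGNOSES):
    """Return True if a readmission would be counted as a complication.

    Both conditions must hold: the readmission falls within the window,
    and its stated reason is on the approved list.
    """
    days_after_surgery = (readmission_date - surgery_date).days
    within_window = 0 <= days_after_surgery <= READMISSION_WINDOW_DAYS
    return within_window and reason_for_readmission in approved


# A patient readmitted 19 days after surgery for a postoperative infection.
print(counts_as_complication(date(2015, 1, 1), date(2015, 1, 20),
                             "postoperative infection"))
```

A readmission for a reason that is not on the list, or one that falls outside the window, is simply not counted under this rule.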

On average, the patients in Surgeon Scorecard who were readmitted to the hospital stayed for five days. The most common reasons for readmission were postoperative infections, followed by clots, bad reactions to artificial joints or implants and blood poisoning. We feel comfortable that the vast majority of these patients are not being treated for minor aches and pains.

If you look hard at Surgeon Scorecard, you’ll find some doctors whose rate of complications could be anywhere from low to medium to high. How could that possibly help patients?

This issue was also raised by Dr. Parks, who pointed out that the online representation of his complication rate for gallbladder surgery touched all three categories. He’s right.

But that doesn’t mean one can’t glean valuable information about Parks.

Click on Parks. The chart shows a rate of complications for gallbladder surgery of 4.2 percent. That puts him second-lowest among the 10 doctors in our data performing that procedure within a 25-mile radius of him.

Because Parks has done a relatively small number of these procedures paid for by Medicare on patients hospitalized overnight, his confidence interval is wide. At its right edge – a very improbable outcome for Parks – the interval enters a zone we’ve defined as high. At its similarly improbable left edge, it enters a zone of low complication rates.

What is a patient to conclude? That Parks is most likely better than average, and that he most likely outperforms his peers.

Does that mean patients should automatically pick him over, say, the surgeon whose rate of complications makes him 10th out of 10? Not necessarily. But it means that a patient considering a gallbladder removal can have a more informed conversation. We recommend asking your doctor how often he or she performs the surgery you need and whether complications are tracked.

Some doctors have found the lack of absolute certainty about their complication rates frustrating. That’s understandable.

This concept is similar to the idea of margins of error in political polling. When there’s a five-point margin of error, a poll that shows Hillary Clinton running three percentage points ahead of, say, Jeb Bush, has a number of possible meanings, including that Bush is actually ahead of Clinton. But the most likely result is that she is three points ahead.
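The arithmetic behind that example can be worked through in a short sketch. It rests on simplifying assumptions that are ours for illustration: the five-point margin is treated as a 95 percent interval applied directly to the lead, and the uncertainty is treated as normally distributed. The function name is hypothetical.

```python
from statistics import NormalDist


def chance_trailing_candidate_leads(lead_points, margin_of_error, z=1.96):
    """Probability that the true lead is below zero.

    Assumptions: the margin of error is a 95% interval on the lead itself
    (so the standard error is margin / 1.96) and the error is normal.
    """
    standard_error = margin_of_error / z
    return NormalDist(mu=lead_points, sigma=standard_error).cdf(0.0)


# A three-point lead with a five-point margin of error.
p = chance_trailing_candidate_leads(3.0, 5.0)
print(f"Chance the trailing candidate is really ahead: {p:.0%}")
```

Under those assumptions the trailing candidate is actually ahead roughly 12 percent of the time, which is why a lead inside the margin of error is uncertain but far from meaningless.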

What our critics are saying, in effect, is that no poll should be published until one of the candidates builds a lead that is larger than the margin of error.

We just don’t agree.

The medical profession already has a sophisticated tool for tracking outcomes that is available to hospitals and draws on more detailed patient records. Isn’t that enough?

The National Surgical Quality Improvement Program, known as NSQIP, collects reams of useful clinical data. But virtually none of it is shared with patients.

The system, which is run by the American College of Surgeons, is entirely voluntary. Hospitals that join agree to provide information about surgeons, patients and medical outcomes. In exchange, they get state-of-the-art advice on reducing errors and improving results. According to the college, hospitals that become part of NSQIP can, on average, expect to avoid 250 to 500 complications and save 12 to 36 lives annually.

That sounds like the easiest decision a hospital could make to protect its patients. But after two decades, only about 650 of America’s 4,000 acute-care hospitals have agreed to provide their data to the program.

To Harvard’s Jha, this underscores the need for tools like Surgeon Scorecard. “No hospital that I’m aware of makes its NSQIP data publicly available in a way that is accessible and usable to patients,” he wrote. Nor are government websites listing hospital and physician information much help. “A few put summary data on Hospital Compare,” Jha wrote, “but it’s inadequate for choosing a good surgeon.

“Why are the NSQIP data not collected routinely and made widely available? Because it’s hard to get hospitals to agree to mandatory data collection and public reporting. Obviously those with the power of the purse — Medicare, for instance — could make it happen. They haven’t.”

And that’s why we’ve created Surgeon Scorecard. The ability to ask questions – and perhaps steer clear of Dr. Abscess – is something that should be available to every patient.